BalanceAI Decentralized AI Node Setup

Linux Version

Prerequisites

This tutorial is for users who want to run a BalanceAI node. Familiarity with the command line and with Docker is recommended.

It is recommended to read the following tutorials:

To run a BalanceAI node, your machine must have a public IP address, and your firewall must allow inbound traffic on the port the node exposes (e.g. 8081).
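On Linux distributions that use ufw (common on Ubuntu), opening the node port could look like the sketch below. It assumes ufw manages your firewall and that the node uses port 8081, the NODE_PORT value used throughout this guide. The helper name `open_node_port` is hypothetical. If the machine runs in a cloud, the provider's firewall or security group usually has to allow the port as well.

```shell
# Hypothetical helper: allow inbound TCP traffic on the node port with ufw.
# Assumes ufw manages the firewall (common on Ubuntu).
open_node_port() {
  local port="${1:-8081}"
  # Reject empty, non-numeric, zero-prefixed or overly long values
  case "$port" in
    ''|*[!0-9]*|0*|??????*) echo "invalid port: $port" >&2; return 1 ;;
  esac
  if [ "$port" -gt 65535 ]; then
    echo "invalid port: $port" >&2
    return 1
  fi
  sudo ufw allow "${port}/tcp"
}
```

For example, `open_node_port 8081` followed by `sudo ufw status` should list the new rule.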

This tutorial covers Linux only. If you are running another operating system, see the guide for that system.

Setup Environment

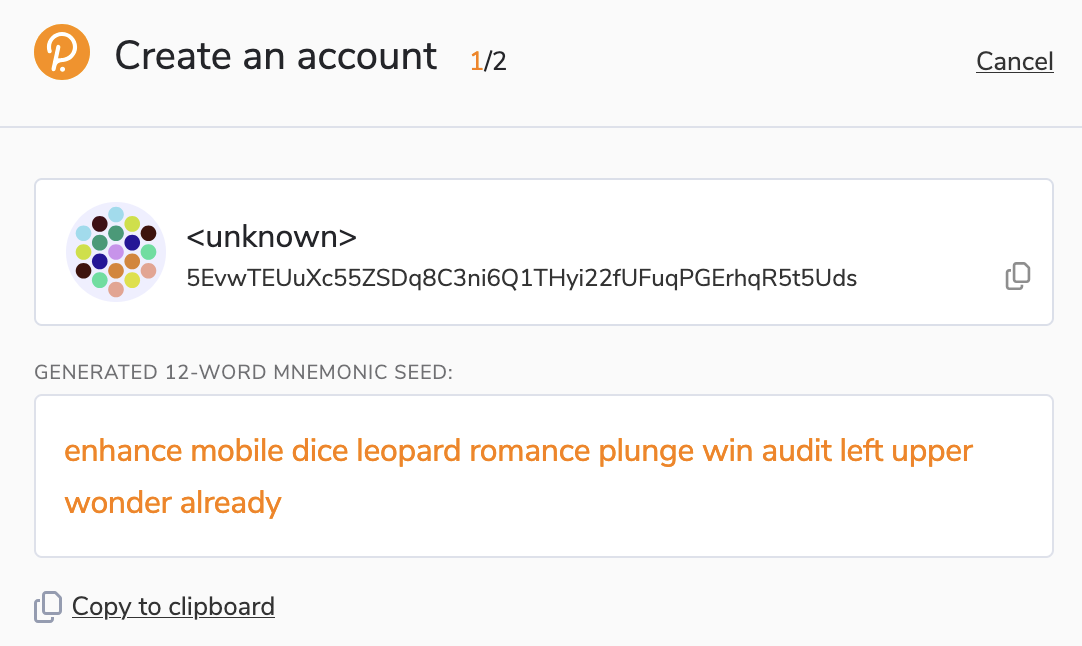

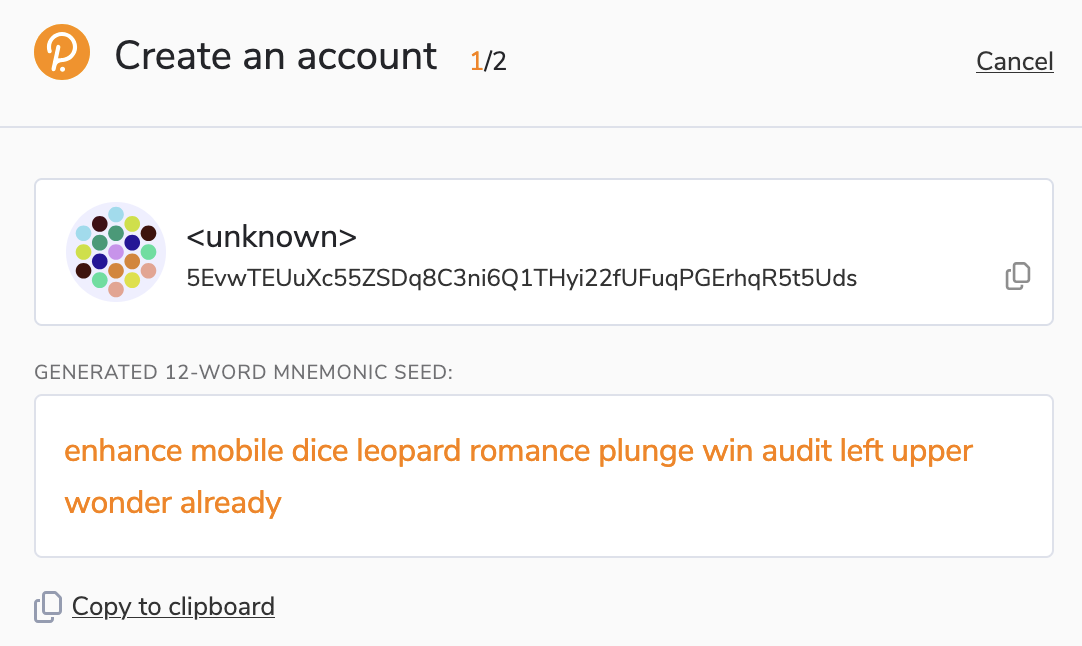

Create Node Wallet

Create a dedicated wallet for your node (see: How to create wallet).

Write down the wallet's seed phrase: you will need it in the node's configuration file.

Fund the new wallet with at least 1100 BAI.

Install Docker

Docker is a containerization platform that allows you to package, ship, and run applications in lightweight, portable, and isolated containers. These containers provide a consistent and reliable way to deploy applications across different environments, such as development, testing, staging, and production, without requiring modifications to the underlying infrastructure. With Docker, developers can focus on writing code without worrying about infrastructure or compatibility issues, and teams can collaborate more effectively by working on different parts of an application independently.

Install Docker based on your Operating System:

You do NOT need a Docker account. Do NOT log in to Docker Hub; just use the commands described below.

Install Ollama

Ollama is a tool for running large language models (LLMs) locally. The BalanceAI node needs a connection to an LLM.
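Before continuing, you can confirm that both Docker and Ollama are installed and on your PATH. The helper below is a hypothetical sketch that only checks for the commands; if you also want to check that the Docker daemon itself works, `docker run hello-world` is the usual smoke test.

```shell
# Hypothetical helper: report every required command that is not on PATH.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "not installed: $cmd" >&2
      missing=1
    fi
  done
  # Non-zero if at least one command was missing
  return "$missing"
}
```

Usage: `require_cmds docker ollama && echo "prerequisites OK"`.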

Download Llama model

Open a new terminal window and run the following command to download and run the Llama 3.1 model:

ollama run llama3.1

More information is available in this guide: https://medium.com/@manuelescobar-dev/running-llama-3-locally-with-ollama-9881706df7ac
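To confirm the download finished, you can inspect the output of `ollama list`, which prints a header row followed by one row per local model, with the name and tag (e.g. `llama3.1:latest`) in the first column. The helper below is a hypothetical sketch that reads that output on standard input. If you only want to download the model without opening a chat session, `ollama pull llama3.1` does that.

```shell
# Hypothetical helper: succeed if `ollama list` output (read from stdin)
# contains MODEL, either as the exact NAME column or without its ":tag".
has_model() {
  local model="$1"
  awk -v m="$model" '
    NR > 1 {
      # Skip the header row, then split "llama3.1:latest" into name and tag
      split($1, parts, ":")
      if ($1 == m || parts[1] == m) found = 1
    }
    END { exit !found }
  '
}
```

Usage: `ollama list | has_model llama3.1 && echo "llama3.1 is downloaded"`.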

Setup Node

Configuration

Create a folder for your node. Inside the folder, create a file named .env

An example .env file is shown below:

MODEL=ollama/llama3.1
MODEL_URL=http://localhost:11434
MAX_RPM=20
NODE_SEED=one two three
NODE_NAME=Node1
NODE_HOST=https://agents-vm-node.my.network:8081
NODE_LOCAL_HOST=0.0.0.0
NODE_PORT=8081
SERPER_API_KEY=11111111111
RPC=TESTNET
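If you prefer to create the folder and the file in one step, the hypothetical helper below writes a template with the same keys and restricts the file's permissions, since it holds your seed phrase. The folder name `balanceai-node` and the `<...>` placeholders are assumptions for illustration. Write values without quotes: Docker's `--env-file` passes quote characters through as part of the value.

```shell
# Hypothetical helper: create the node folder and an .env template inside it.
# Replace every <...> placeholder before using the file.
write_env_template() {
  local dir="${1:-balanceai-node}"
  mkdir -p "$dir" || return 1
  # Quoted 'EOF' stops the shell from expanding anything in the template
  cat > "$dir/.env" <<'EOF'
MODEL=ollama/llama3.1
MODEL_URL=http://localhost:11434
MAX_RPM=20
NODE_SEED=<your wallet seed phrase>
NODE_NAME=<your node name>
NODE_HOST=https://<your-dns-or-ip>:8081
NODE_LOCAL_HOST=0.0.0.0
NODE_PORT=8081
SERPER_API_KEY=<your serper key>
RPC=TESTNET
EOF
  # The file contains the seed phrase, so make it readable by your user only
  chmod 600 "$dir/.env"
}
```

Usage: `write_env_template ~/balanceai-node`, then open `~/balanceai-node/.env` in any editor and fill in the placeholders.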

Set each value to match your own setup:

- NODE_SEED - the seed phrase of the wallet you want to use for your node
- NODE_NAME - a name for your node
- NODE_HOST - the URL your service will run on, e.g. your DNS name or IP address followed by the NODE_PORT value
- SERPER_API_KEY - register for a key here: https://serper.dev/signup
- RPC - the BalanceAI environment; currently only TESTNET is available

Make sure the following variables are set exactly as shown:

MODEL=ollama/llama3.1
MODEL_URL=http://localhost:11434
MAX_RPM=20
NODE_LOCAL_HOST=0.0.0.0
NODE_PORT=8081
RPC=TESTNET
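Before running any Docker command, you can check the file against both rules above: the values you fill in must be non-empty, and the fixed values must match exactly. The sketch below is hypothetical (function names included); it reads the file as plain text without executing it, and if a key appears more than once it uses the last occurrence.

```shell
# Hypothetical helper: print KEY's value from an env file without executing it.
env_value() {
  local file="$1" key="$2"
  awk -v k="$key" 'index($0, k "=") == 1 { v = substr($0, length(k) + 2) } END { print v }' "$file"
}

# Hypothetical helper: check a node .env file against the rules in this guide.
check_env() {
  local file="${1:-.env}" status=0 key expected actual
  # Values you fill in yourself: here they only need to be non-empty
  for key in NODE_SEED NODE_NAME NODE_HOST SERPER_API_KEY; do
    if [ -z "$(env_value "$file" "$key")" ]; then
      echo "missing: $key" >&2
      status=1
    fi
  done
  # Values that must match this guide exactly
  while IFS='=' read -r key expected; do
    actual="$(env_value "$file" "$key")"
    if [ "$actual" != "$expected" ]; then
      echo "wrong value: $key=$actual (expected $expected)" >&2
      status=1
    fi
  done <<'EOF'
MODEL=ollama/llama3.1
MODEL_URL=http://localhost:11434
MAX_RPM=20
NODE_LOCAL_HOST=0.0.0.0
NODE_PORT=8081
RPC=TESTNET
EOF
  return "$status"
}
```

Usage, from the node folder: `check_env .env && echo "configuration looks complete"`. Each problem is reported on its own line, so one run lists everything that needs fixing.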

NODE_SEED

Provide the seed phrase you noted when creating the node wallet.

NODE_NAME

Any name that identifies your node, e.g. MyNode.

NODE_HOST

Provide the DNS host name of your server. If you do not have one, use its public IP address.

You can use the following service to find your public IP address: https://www.showmyip.com/
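A common mistake is a NODE_HOST whose port differs from NODE_PORT. The hypothetical helper below checks that the value starts with a scheme and ends with `:<NODE_PORT>`; requiring an explicit `http://` or `https://` scheme is an assumption based on the example value in this guide.

```shell
# Hypothetical helper: sanity-check NODE_HOST against NODE_PORT.
check_node_host() {
  local host="$1" port="$2"
  # Assumption: NODE_HOST is a full URL with a scheme, as in the example .env
  case "$host" in
    http://*|https://*) ;;
    *) echo "NODE_HOST should start with http:// or https://" >&2; return 1 ;;
  esac
  # The port at the end of the URL must be the NODE_PORT value
  case "$host" in
    *":$port"|*":$port/") return 0 ;;
    *) echo "NODE_HOST should end with :$port" >&2; return 1 ;;
  esac
}
```

Usage: `check_node_host https://agents-vm-node.my.network:8081 8081 && echo "NODE_HOST OK"`.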

SERPER_API_KEY

Register a key here: https://serper.dev/signup

Test whether the configuration is correct.

Open a terminal in your node folder (the command reads .env from the current directory) and run:

docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm info

You should see something similar to:

BalanceAI AgentVM Node version: 0.9
RPC : TESTNET
Node Name: Node1
Node Wallet Address: ix5RiRRDJzWHDJzwQvwhtTQ4EXBDEdfLGDHtxRgtcZfG94V8y
Node API URI: https://agents-vm-node.my.network:8081
LLM NOT available, Please configure it.
Node NOT registered.
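The last two lines of the info output are the ones to watch. The hypothetical helper below reads that output on standard input and prints a short summary. It assumes a registered node simply stops printing `Node NOT registered`, since this guide does not show the registered output, and it strips the carriage returns a Docker TTY (`-t`) may add.

```shell
# Hypothetical helper: summarize `agentvm info` output read from stdin.
node_status() {
  local out llm=missing registered=yes
  # A TTY (docker run -t) may add carriage returns; drop them before matching
  out="$(tr -d '\r')"
  # Guard against empty or unrelated output (e.g. Docker failed to start)
  case "$out" in
    *"Node Name:"*) ;;
    *) echo "no info output found" >&2; return 2 ;;
  esac
  case "$out" in *"LLM is available"*) llm=ok ;; esac
  # Assumption: a registered node no longer prints "Node NOT registered"
  case "$out" in *"Node NOT registered"*) registered=no ;; esac
  printf 'llm: %s\nregistered: %s\n' "$llm" "$registered"
  [ "$llm" = ok ] && [ "$registered" = yes ]
}
```

Usage: `docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm info | node_status`. If Docker complains about the TTY, drop `-t` from the command.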

Once you have confirmed that the node software works, configure your LLM.

LLM

The BalanceAI node needs an LLM connection.

The default LLM for a BalanceAI node is Llama.

If Ollama is not running with the required model (Llama 3.1), the info command shows:

LLM NOT available, Please configure it.


To configure the LLM, open a terminal.

If you have already downloaded the model as described in the Download Llama model section, start the Ollama server:

ollama serve

Otherwise, download and start the model:

ollama run llama3.1
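By default Ollama listens on http://localhost:11434 (the MODEL_URL value in .env), and a plain request to that address answers with the text `Ollama is running`. The hypothetical helper below polls that address until the server responds, which is handy when you start Ollama in another terminal; the attempt count and two-second timeout are arbitrary choices, and it assumes curl is installed.

```shell
# Hypothetical helper: poll the Ollama server until it answers or we give up.
wait_for_ollama() {
  local url="${1:-http://localhost:11434}" tries="${2:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    # The root endpoint replies with the text "Ollama is running"
    if curl -sf --max-time 2 "$url" | grep -q 'Ollama is running'; then
      echo "Ollama is up at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "Ollama did not respond at $url after $tries attempts" >&2
  return 1
}
```

Usage: `wait_for_ollama && docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm info`.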

Check that the model is configured properly by running:

docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm info

The response should now include the line LLM is available:

BalanceAI AgentVM Node version: 0.9
RPC : TESTNET
Node Name: Node1
Node Wallet Address: ix5RiRRDJzWHDJzwQvwhtTQ4EXBDEdfLGDHtxRgtcZfG94V8y
Node API URI: https://agents-vm-node.my.network:8081
LLM is available
Node NOT registered.

Registering a node

Once all parameters are configured in the .env file and the LLM is connected, register your node on the BalanceAI chain.

Your node wallet must hold at least 1100 BAI to pay the transaction fees and the registration deposit.

Register your node using the following command:

docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm register

The command should return:

Successfully registered

You can check if the node is registered by running the following command:

docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm info
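The info and register commands share the same `docker run` prefix. A small shell function, hypothetical and named `agentvm` here for illustration, saves retyping it and refuses to run outside a folder that contains .env.

```shell
# Hypothetical helper: run an AgentVM subcommand with the .env in the current folder.
agentvm() {
  if [ ! -f .env ]; then
    echo "no .env in $(pwd); cd into your node folder first" >&2
    return 1
  fi
  # Same image, platform and env file as the commands in this guide
  docker run -it --env-file .env --platform linux/amd64 balanceai/agentvm "$@"
}
```

Usage: `agentvm info` or `agentvm register`. Starting the node also needs `--network host` before the image name, so keep using the full command from the Run a node section for that.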

Run a node

Once the node is registered, start it with the following command:

docker run -it --env-file .env --platform linux/amd64 --network host balanceai/agentvm run

The command above works on Linux only. If you are running Windows or MacOS, see the corresponding guide.
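The command above keeps the node attached to your terminal, so it stops when you close it. One way to keep it running in the background is Docker's detached mode with a restart policy, sketched below. The container name `balanceai-node` is a hypothetical choice, and the sketch assumes the `run` subcommand does not need an interactive terminal, since it drops `-it`.

```shell
# Hypothetical helper: start the node in the background; Docker restarts it
# (e.g. when the Docker daemon restarts) unless you stop it yourself.
start_node_detached() {
  local name="${1:-balanceai-node}"
  docker run -d --name "$name" --restart unless-stopped \
    --env-file .env --platform linux/amd64 --network host \
    balanceai/agentvm run
}
```

After starting it, `docker logs -f balanceai-node` follows the node output, `docker stop balanceai-node` stops it, and `docker rm balanceai-node` removes the container so you can create it again under the same name.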

You should see the following output:

Running Node1 ...
INFO: Uvicorn running on http://0.0.0.0:8081 (Press CTRL+C to quit)
INFO: Started parent process [96160]
INFO: Started server process [96191]
INFO: Started server process [96192]
INFO: Started server process [96189]

Congratulations, your node is now providing resources to the BalanceAI Network.
