System Architecture

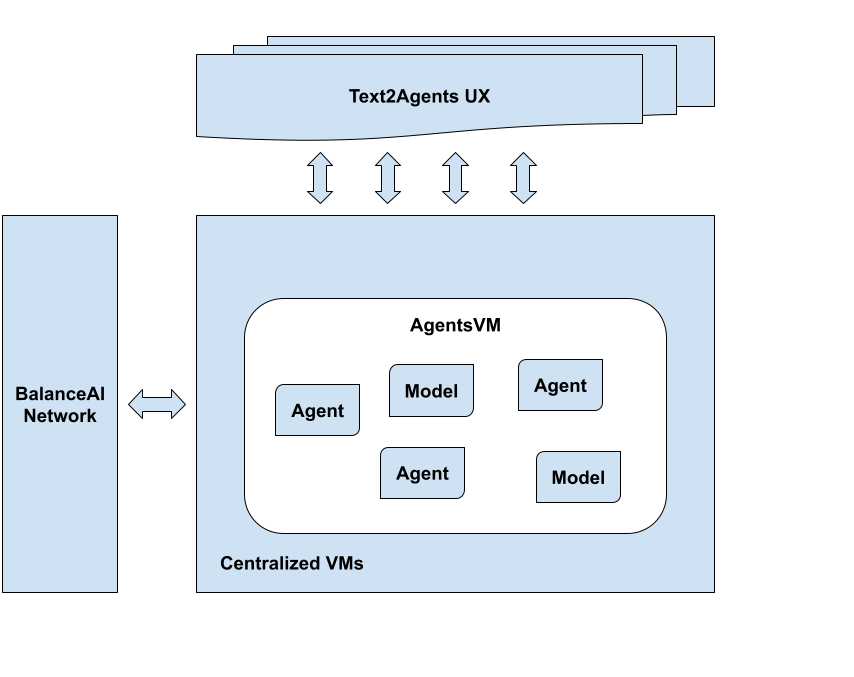

Current setup

Our current architecture is centralized: all agents and models are hosted on virtual machines (VMs) running on one or more central servers. This keeps environments consistent and access reliable for all operations, but it limits scalability as the number of users grows.

For V1, we plan to move to a distributed system architecture in which each agent and model runs independently on user-owned devices (nodes). This decentralized approach is intended to provide:

- Scalability: Supporting far more users without straining central servers.

- Flexibility: Users can run tasks on their own hardware, optimizing resource usage.

- Performance: Removing centralized bottlenecks to speed up processing.

This transition is intentional and driven by the growing complexity of AI tasks and organizational needs.

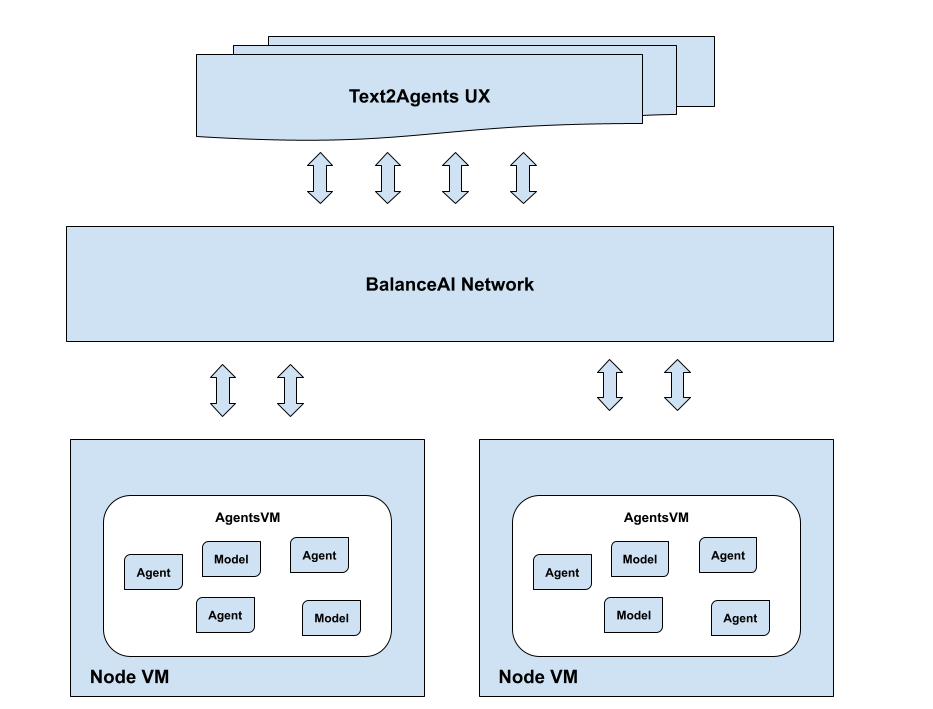

V1 System Architecture

In a distributed system architecture, each agent and model runs independently on dedicated nodes or machines owned by network users.

Every BalanceAI network user will be able to run their own node and contribute computational power to the network.

This approach offers significant advantages over centralized architectures:

- Decentralized Execution: Agents and models run autonomously on their respective nodes, allowing them to access local resources such as:

  - Computational Resources: Dedicated CPU, GPU, or other processing units.

  - Memory: Sized for each node's specific needs, without interference from other nodes.

- Scalability: The system can scale horizontally by adding more user-owned nodes to handle increased workloads.

- Flexibility: Users retain control over their hardware, so they can deploy agents and models on the devices that best suit their requirements (e.g., laptops for mobility or high-performance servers for heavy workloads).

- Network Utilization: Nodes can use their local network bandwidth for data exchange, which can reduce latency compared to routing everything through a central server.

- Security: User-owned nodes isolate tasks within environments the user trusts, limiting the impact of potential breaches.

- Performance Optimization: Each node's hardware can be matched to specific tasks, improving overall system efficiency and response times.
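To make the scaling and task-matching ideas above more concrete, here is a minimal sketch of how user-owned nodes might register their resources and how a task could be placed on a suitable node. It is an illustration only: `Node`, `Task`, `NodeRegistry`, and the "least busy eligible node" rule are hypothetical names and assumptions, not part of the BalanceAI codebase or its actual scheduling logic.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """A user-owned machine that has joined the network (hypothetical model)."""
    node_id: str
    cpu_cores: int
    memory_gb: int
    has_gpu: bool = False
    active_tasks: int = 0


@dataclass
class Task:
    """An agent or model workload with simple resource requirements (assumed)."""
    name: str
    min_memory_gb: int
    needs_gpu: bool = False


@dataclass
class NodeRegistry:
    """Tracks available nodes; a stand-in for whatever discovery the network uses."""
    nodes: Dict[str, Node] = field(default_factory=dict)

    def register(self, node: Node) -> None:
        # Horizontal scaling: total capacity grows by adding more nodes.
        self.nodes[node.node_id] = node

    def place(self, task: Task) -> Optional[Node]:
        # Keep only nodes whose hardware satisfies the task's requirements.
        candidates = [
            n for n in self.nodes.values()
            if n.memory_gb >= task.min_memory_gb
            and (n.has_gpu or not task.needs_gpu)
        ]
        if not candidates:
            return None
        # Assumed policy: pick the least busy eligible node (ties broken by ID).
        chosen = min(candidates, key=lambda n: (n.active_tasks, n.node_id))
        chosen.active_tasks += 1
        return chosen


registry = NodeRegistry()
registry.register(Node("laptop-1", cpu_cores=8, memory_gb=16))
registry.register(Node("server-1", cpu_cores=64, memory_gb=256, has_gpu=True))

placed = registry.place(Task("fine-tune-model", min_memory_gb=32, needs_gpu=True))
print(placed.node_id)  # server-1
```

In this sketch, adding capacity is just another `register` call, and a task that no node can satisfy returns `None` rather than being forced onto unsuitable hardware. A real deployment would also need node discovery, health checks, and authentication, which are omitted here.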

This architecture is designed to evolve with the demands of AI computing, offering scalability, flexibility, and enhanced performance while maintaining user privacy and control.